In early 2026, Nigeria found itself confronting a new threat that blends technology with deception: AI-generated impersonation. As artificial intelligence tools become more powerful and accessible, deepfake videos and fake social media narratives have begun to affect the reputations and public voices of well-known Nigerians. Among the most striking examples this year are incidents involving filmmaker Stephanie Linus, former Oyo State First Lady Florence Ajimobi, and social media influencer Funke Bucknor-Obruthe. Each of these personalities has publicly pushed back against misleading content falsely attributed to them.

Stephanie Linus Raises Alarm Over Fake AI Scam

On January 23, 2026, Stephanie Linus, a respected Nollywood filmmaker and advocate, took to Instagram to warn her followers about a fake video created using artificial intelligence that was circulating online. According to her post, the video used her face and voice, as well as the name of a media organization, to promote a fraudulent investment scheme. Linus wrote in capital letters, “FAKE AI VIDEO SCAM ALERT!!! This is becoming too much. … This video is not from me. It is 100% fake and created by scammers to deceive people.”

She urged people not to share the video, to report the accounts distributing it, and to protect their friends and family from falling for the scheme. Linus also said authorities and digital platforms had been notified about the content.

Linus explicitly stated that the deepfake used her identity to push a scam, leveraging the trust her name carries. Her statement made it clear that seeing a familiar face or hearing a familiar voice online no longer guarantees authenticity in a world where AI can produce convincing fakes.

Florence Ajimobi Denounces a Deepfake Political Clip

Almost simultaneously, Florence Ajimobi, the widow of the late Governor Abiola Ajimobi and a public figure in her own right, found herself defending her reputation against another AI-driven fabrication. A video circulated online that appeared to show her making controversial statements about the upcoming 2027 Oyo State gubernatorial elections, suggesting a political “war” between parties.

Mrs Ajimobi reportedly made the statement in June 2025 during the 5th memorial prayer of her late husband, former Oyo State Governor Abiola Ajimobi, held in Ibadan, Oyo State.

In the full video, she is heard saying:

“We’re going to war in 2027. It’s going to be war, head-on war, PDP must go.

“Whatever they want to do, whatever they want to try, whatever tricks, all powers belongs to God. But we’re going to beg God and we’re going to fight them.

“Whatever they have in the state we have at the federal level, we have money, they have money in the state, we have money in the federal level, we have all the support we need. We are not going to be intimidated. So let them get ready for us, we’re ready for them.”

Ajimobi and her team responded with a formal statement, calling the video a “deepfake”.

A deepfake is an AI-generated fabrication in which a person’s face, body, lip movements, or voice are digitally altered so that they appear to belong to someone else. Deepfakes are typically used maliciously or to spread false information.

In her statement, she described the footage as “a synthetic fabrication created using generative AI,” designed to mislead the public and distort political discourse. Ajimobi urged citizens, media outlets, and security agencies to treat the clip as a malicious hoax and not share it without verification. She also said her legal team might pursue action against those responsible.

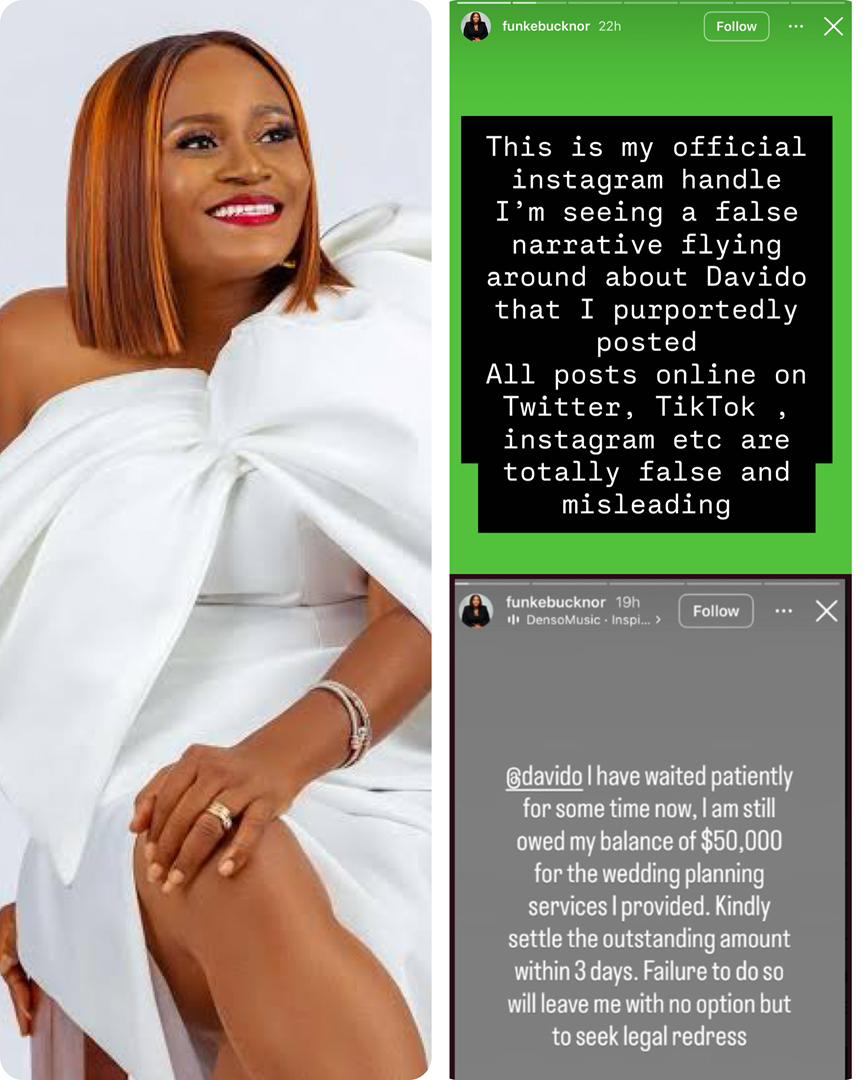

Funke Bucknor-Obruthe Addresses False Social Media Claims

Around the same period, influencer and event planner Funke Bucknor-Obruthe found herself reacting to a false narrative circulating about her on Instagram and other platforms. Some posts wrongly suggested she had made statements about Nigerian artist Davido and financial matters involving his wedding. One post, which came from an account with a similar username, reads:

“@davido I have waited patiently for some time now, I am still owed my balance of $50,000 for the wedding planning services I provided. Kindly settle the outstanding amount within 3 days. Failure to do so will leave me with no option but to seek legal redress.”

Bucknor-Obruthe took to social media shortly after to clarify that the narrative was entirely “false and misleading”, rejecting any association with the posts and urging followers not to believe the rumours.

Although no deepfake video was involved in Bucknor-Obruthe’s situation, her experience underscores how easily false content can spread online, whether through AI-generated media or through misinformation and fake accounts that use a person’s name to attract attention.

The Bigger Picture: Why These Incidents Matter

These high-profile cases come at a time when deepfakes and AI impersonations are no longer rare or easily dismissible. In fact, researchers have noted a dramatic rise in online AI-generated misinformation that requires forensic review to distinguish real from fake.

The technology that makes these deepfakes possible also makes them convincing. According to experts, AI models can study public photos, video clips, and recordings of individuals, then generate new content that mimics how they look and sound, sometimes convincingly enough to deceive even experienced viewers.

In Nigeria, where social media use is widespread and digital literacy varies among the population, these AI impersonation incidents pose significant challenges. False narratives and fake videos can spread rapidly before fact-checkers or authorities can intervene, which can have real consequences for the individuals involved and for public trust in general.

Currently, Nigeria’s legal framework, including the Nigeria Data Protection Act and the Cybercrimes Act, provides some protections against identity theft and misuse of personal information. However, these laws were designed before AI-driven impersonations became a global issue and do not directly address the unique challenges posed by deepfake technology.
